University Student Readiness and Academic Integrity in Using ChatGPT and AI Tools for Assessments.

Chuah, K.M. and Sumintono, B. (2024). University Student Readiness and Academic Integrity in Using ChatGPT and AI Tools for Assessments. In: Grosseck, G., Sava, S., Ion, G. and Malita, L. (eds). Digital Assessment in Higher Education, Navigating and Researching Challenges and Opportunities. https://link.springer.com/chapter/10.1007/978-981-97-6136-4_4

Abstract

This chapter explores the use of ChatGPT for academic support in higher education, concentrating on students’ readiness, perception of its usefulness, and understanding of academic integrity issues. A quantitative approach with a non-experimental design was employed. The study involved 374 university students as participants; data cleaning and validation were carried out using WINSTEPS software, and further analysis was conducted using the Rasch Rating Scale Model. The key findings highlight varied levels of student readiness and awareness concerning the use of ChatGPT and adherence to academic integrity. The study points to the urgent need for universities to provide more explicit guidelines on using artificial intelligence (AI) tools within academic contexts. The results reveal that while students see the potential benefits of ChatGPT in aiding their studies, many lack full preparedness for its incorporation into their academic routines. The analysis of variance indicated significant differences in the readiness and perceived usefulness of ChatGPT among students based on the frequency of its usage. However, there were no significant differences in terms of academic integrity across different demographic groups. This research contributes to the understanding of how students perceive and engage with AI tools in higher education. It also provides insights into the future of digital assessments in higher education, in particular how AI tools like ChatGPT might reshape assessment methods with regard to upholding academic integrity and honesty.

Longitudinal Study of School Climate Instrument with Secondary School Students: Validity and Reliability Analysis with the Rasch Model

Zynuddin, S.N. & Sumintono, B. (2024). Longitudinal Study of School Climate Instrument with Secondary School Students: Validity and Reliability Analysis with the Rasch Model. Malaysian Online Journal of Educational Management, 12(4), 24-41. https://mojem.um.edu.my/index.php/MOJEM/article/view/55629

Abstract

The school climate plays a pivotal role in students’ outcomes. Previous literature has highlighted several methodological approaches employed in the school climate domain, including longitudinal studies. However, little is known about the validity and reliability of school climate instruments for longitudinal studies using Rasch analysis. The Rasch model is a powerful approach to validating assessments at both the item and test levels. It is built on the probability of each response and includes item difficulty parameters to characterize the measured items. Moreover, the score represents both the item and the person involved in the assessment. Thus, the current study aimed to validate a school climate instrument for longitudinal studies with a six-month gap within the context of secondary school students by utilising Rasch analysis. This study evaluated aspects of reliability and validity, such as unidimensionality, rating scale analysis, item fit statistics, item targeting, and differential item functioning. A total of 1,495 secondary school students from public schools in Selangor, Malaysia, completed a 28-item Malay version of the school climate survey at Time-1 and Time-2, with a six-month gap. The results of the Rasch analysis indicated that the instrument had excellent reliability and separation indices, excellent unidimensionality and construct validity, a functional rating scale, good item-person targeting, and good item fit statistics. The current findings provide valid and reliable insights for policymakers to strategise interventions and initiatives to enhance the quality of school climate and overall education, particularly in the Asian context.

Validation of the Indonesian version of the psychological capital questionnaire (PCQ) in higher education: a Rasch analysis

Ratnaningsih, I.Z., Prihatsanti, U., Prasetyo, A.R. and Sumintono, B. (2024), "Validation of the Indonesian version of the psychological capital questionnaire (PCQ) in higher education: a Rasch analysis", Journal of Applied Research in Higher Education, https://doi.org/10.1108/JARHE-10-2023-0480

Abstract

Purpose – The present study aimed to validate the Indonesian-language version of the psychological capital questionnaire (PCQ), specifically within the context of higher education, by utilising Rasch analysis to evaluate reliability and validity aspects such as item-fit statistics, rating scale function, and differential item functioning of the instrument. These questionnaires are designed to assess students’ initial psychological status, aiming to ease their transition from school to university and monitor undergraduate students’ mental health.

Design/methodology/approach – A total of 1,012 undergraduate students (female = 61.2%; male = 38.8%) from a university in Central Java, Indonesia completed the 24-item Indonesian version of the PCQ. The sampling technique used was quota sampling. Data were analysed with the Rasch model using the Winsteps 3.73 software.

Findings – The results of the Rasch analysis indicated that the reliability of the instrument was good (α = 0.80), item quality was excellent (1.00), and person reliability was consistent (0.77). In the validity aspect, all four domains of the PCQ exhibited unidimensionality, and a rating scale with four answer choices was deemed appropriate. The study also identifies the item difficulty level in each dimension.

Practical implications – The practical implications of this study are beneficial for higher education institutions. They can use the validated Indonesian version of the PCQ to monitor the mental well-being of undergraduate students. Mapping the PsyCap can serve as a basis for developing and determining learning policies, potentially leading to improvements in student academic performance. The theoretical implications of this study are related to the advancement of measurement theory. By employing Rasch analysis, the study contributes to enhancing the validity and reliability of measurement, particularly in the context of educational and psychological assessment in Indonesia.

Originality/value – This current study confirmed that the Indonesian version of PCQ adequately measures psychological capital in higher education, particularly in the Indonesian context.

Keywords: Psychological capital, Rasch analysis, Undergraduate student, Validation, Indonesia

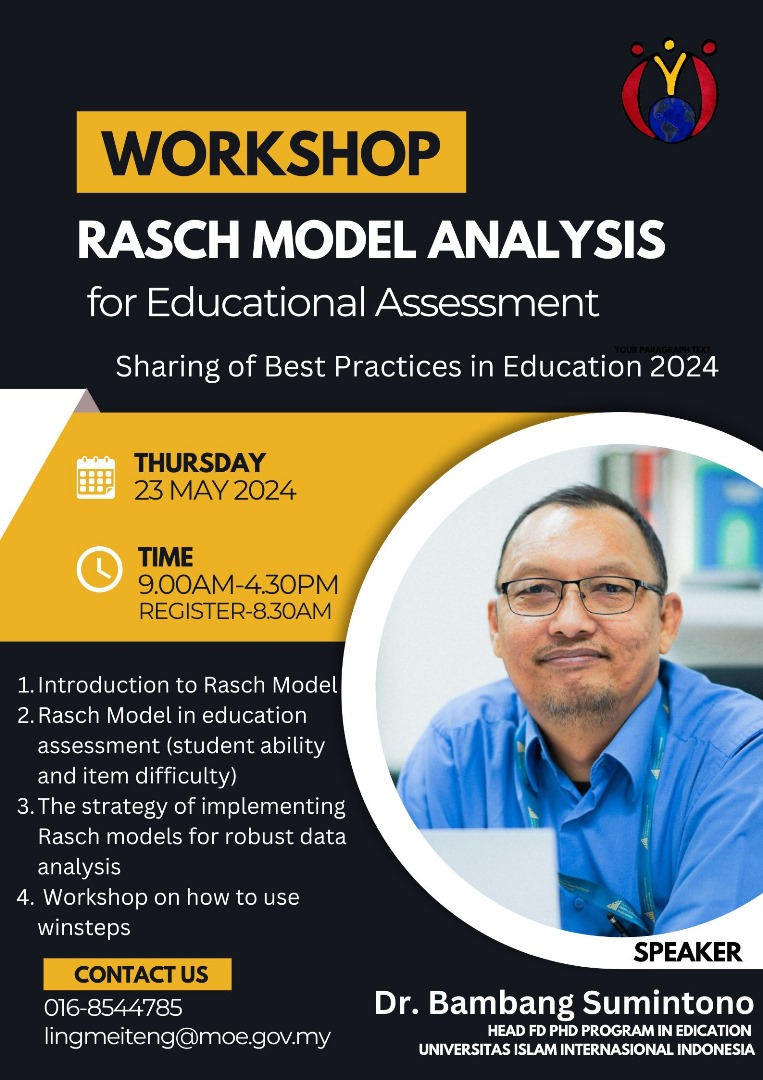

FoE UIII lecturer delivers workshop on Rasch model measurement for teachers in Miri, Sarawak, Malaysia

The Rasch model is a powerful tool in cognitive testing, transforming raw scores into interval measures, which allows for more precise and meaningful interpretations of test results. On Thursday, 23 May 2024, Dr Bambang, a lecturer in the Faculty of Education UIII, delivered a full-day workshop for teachers in Miri, Sarawak, Malaysia. The workshop introduced the fundamentals of the Rasch model, focusing on its application in cognitive assessment, particularly instrument analysis and person analysis. Participants learned how the model ensures that test items are appropriate for different ability levels, providing a fair and accurate measure of cognitive skills. Through hands-on sessions, attendees practised calibrating test items and interpreting Rasch output using relevant software. Key topics included item difficulty, person ability estimation, and the creation of invariant measurement scales. The workshop was designed especially for teachers to enhance their understanding of test and item development and of data analysis in cognitive testing. By the end, participants were equipped with the skills to apply the Rasch model to improve the reliability and validity of cognitive assessments in their respective teaching subjects.
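
As a rough illustration of the ideas named above (item difficulty, person ability, and the move from raw scores to an interval scale), the following is a minimal Python sketch assuming the dichotomous Rasch model. The function names are hypothetical and not taken from the workshop materials, and the log-odds conversion is only a first approximation: Rasch software such as Winsteps estimates person and item measures iteratively.

```python
import math


def rasch_probability(ability: float, difficulty: float) -> float:
    """Probability of a correct response under the dichotomous Rasch model.

    Both arguments are in logits; only their difference matters.
    """
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def raw_score_to_logit(raw_score: int, max_score: int) -> float:
    """Log-odds of a raw score, a first approximation of a person measure.

    Extreme scores (0 or the maximum) have no finite logit, so they are
    rejected here rather than adjusted (an assumption of this sketch).
    """
    if not 0 < raw_score < max_score:
        raise ValueError("raw_score must lie strictly between 0 and max_score")
    proportion = raw_score / max_score
    return math.log(proportion / (1.0 - proportion))


if __name__ == "__main__":
    # A person whose ability equals the item difficulty has an even chance.
    print(rasch_probability(ability=1.0, difficulty=1.0))
    # Equal raw-score steps do not map to equal logit steps near the extremes.
    for score in (5, 10, 15, 18):
        print(score, round(raw_score_to_logit(score, 20), 2))
```

When ability and difficulty are equal, the probability of success is one half. The loop shows why raw scores are not treated as interval data: the same raw-score gain corresponds to a larger logit gain near the top of the score range.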

Profile of teacher leaders in an Indonesian school context: How the teachers perceive themselves

Hariri, H., Mukhlis, H., & Sumintono, B. (2023). Profile of teacher leaders in an Indonesian school context: How the teachers perceive themselves. Asia Pacific Journal of Educators and Education, 38(2), 67–87. https://doi.org/10.21315/apjee2023.38.2.5

A Rasch analysis of the International Personality Item Pool Big Five Markers Questionnaire: Is longer better?

Akhtar, H., & Sumintono, B. (2023). A Rasch analysis of the International Personality Item Pool Big Five Markers Questionnaire: Is longer better?. Primenjena Psihologija, 16(1), 3-28. https://doi.org/10.19090/pp.v16i1.2401

Abstract

The 50-item International Personality Item Pool version of the Big Five Markers (IPIP-BFM) is an open-source and widely used measure of the big five personality traits. A short version of this measure (IPIP-BFM-25) has been developed using the classical test theory approach. No study has examined the psychometric properties of the longer and shorter versions of IPIP-BFM Indonesia using modern test theory. This study aimed to evaluate the psychometric properties of the Indonesian version of IPIP-BFM as well as IPIP-BFM-25 using Rasch analysis. The analysis was conducted to test their dimensionality, rating scale functioning, item properties, person responses, targeting, reliability, and item bias on 1003 Indonesian samples. The findings showed that both IPIP-BFM and IPIP-BFM-25 Indonesia have some adequate psychometric properties, especially regarding category function, item properties, reliability, and item bias. However, the emotional stability and intellect scales did not meet the assumption of unidimensionality, and all items on the scales were too easy for participants to endorse. In general, longer measures outperformed shorter measures in terms of person separation and reliability. Further testing and refinement must be conducted.

Analisis Kesahan Kandungan Instrumen Kompetensi Guru untuk Melaksanakan Pentaksiran Bilik Darjah Menggunakan Model Rasch Pelbagai Faset.

Mohamat, R., Sumintono, B. and Abd Hamid, H.S. (2022). Analisis Kesahan Kandungan Instrumen Kompetensi Guru untuk Melaksanakan Pentaksiran Bilik Darjah Menggunakan Model Rasch Pelbagai Faset (Content Validity Analysis of an Instrument to Measure Teacher’s Competency for Classroom Assessment Using Many Facet Rasch Model). Jurnal Pendidikan Malaysia, 47(1), 1-14. DOI: http://dx.doi.org/10.17576/JPEN-2022-47.01-01

Abstract

Kesahan kandungan instrument adalah penting bagi memastikan item-item yang dibina berupaya mengukur perkara yang sepatutnya diukur dan membincangkan sejauh mana item mewakili kandungan yang dimaksudkan. Kajian ini bertujuan menguji kesahan kandungan Instrumen Kompetensi Guru untuk Melaksanakan Pentaksiran Bilik Darjah (IkomGuruPBD) dengan menggunakan analisis Model Rasch Pelbagai Faset (MRPF). IkomGuruPBD terdiri daripada 56 item yang dibina berdasarkan 3 konstruk utama iaitu Pengetahuan PBD, Kemahiran PBD dan Sikap terhadap PBD. Reka bentuk kajian adalah kaedah tinjauan kuantitatif dengan pendekatan penilai berganda (multirater) menggunakan soal selidik IkomGuruPBD yang telah diedarkan kepada 12 orang panel pakar. Setiap pakar perlu menentukan tahap persetujuan mereka tentang kesesuaian setiap item dengan menggunakan skala Likert 3 mata terhadap tiga aspek penilaian bagi setiap item. Dapatan menunjukkan antara kelebihan MRPF adalah boleh menentukan tahap ketegasan penilai dan konsistensi penilai, juga mendapati dapatan respon luar jangkaan. Walaupun setiap penilai diberi instrumen, aspek penilaian dan kategori skala yang sama, namun MRPF boleh membandingkan tahap ketegasan secara individu bagi setiap penilai. MRPF juga boleh mengesan kualiti item untuk membantu pengkaji mengenal pasti item yang baik dan item yang lemah untuk ditambah baik. MRPF mempunyai kelebihan untuk memberikan maklumat yang lengkap mengenai ciri psikometrik bagi IkomGuruPBD yang diuji.

An instrument’s content validity is important to ensure that the items constructed are able to measure what they should measure, and it concerns the extent to which the items represent the intended content. This study examined the content validity of an instrument to measure teachers’ competency for classroom assessment (IkomGuruPBD) by using the Many-Facet Rasch Model (MFRM). IkomGuruPBD consists of 56 items built on 3 main constructs: knowledge of PBD, skills in PBD and attitude towards PBD. The research used a quantitative survey design with a multirater approach, in which the IkomGuruPBD questionnaire was distributed to a panel of 12 experts. Each expert determined their level of agreement about the suitability of each item by using a 3-point Likert scale on three aspects of evaluation. Results show that MFRM can determine the severity and consistency level of the raters and can also report unexpected bias responses. Although all raters were given the same instrument, the same aspects of evaluation and the same scale categories, MFRM is able to compare the severity level of each rater individually. Furthermore, MFRM can detect the quality of the items and help the researcher to identify good items and weak items to be improved. MFRM has the advantage of providing complete information on the psychometric characteristics of the IkomGuruPBD being tested.

Measuring Changes in Hydrolysis Concept of Students Taught by Inquiry Model: Stacking and Racking Analysis Techniques in Rasch Model

Laliyo, L.A.R., Sumintono, B. and Panigoro, C. (2022). Measuring Changes in Hydrolysis Concept of Students Taught by Inquiry Model: Stacking and Racking Analysis Techniques in Rasch Model. Heliyon, 8(3): e09126. https://doi.org/10.1016/j.heliyon.2022.e09126

Abstract

This research aimed to employ stacking and racking analysis techniques in the Rasch model to measure the hydrolysis conceptual changes of students taught by the process-oriented guided inquiry learning (POGIL) model in the context of socio-scientific issues (SSI) with the pretest-posttest control group design. Such techniques were based on a person- and item-centered statistic to determine how students and items changed during interventions. Eleventh-grade students in one of the top-ranked senior high schools in the eastern part of Indonesia were involved as the participants. They provided written responses (pre- and post-test) to 15 three-tier multiple-choice items. Their responses were assessed through a rubric that combines diagnostic measurement and certainty of response index. Moreover, the data were analyzed following the Rasch Partial Credit Model, using the WINSTEPS 4.5.5 software. The results suggested that students in the experimental group taught by the POGIL approach in the SSI context had better positive conceptual changes than those in the control class learning with a conventional approach. Along with the intervention effect, in certain cases, it was found that positive conceptual changes were possibly due to student guessing, which happened to be correct (lucky guess), and cheating. In other cases, students who experienced negative conceptual changes may respond incorrectly due to carelessness, the boredom of problem-solving, or misconception. Such findings have also proven that some students tend to give specific responses after the intervention in certain items, indicating that not all students fit the intervention. Besides, stacking and racking analyses are highly significant in detailing every change in students’ abilities, item difficulty levels, and learning progress.

Online Mental Health Survey for Addressing Psychosocial Condition During the COVID-19 Pandemic in Indonesia: Instrument Evaluation.

Sunjaya, D.K., Sumintono, B., Gunawan, E., Herawati, D.M.D., and Hidayat, T. (2022). Online Mental Health Survey for Addressing Psychosocial Condition During the COVID-19 Pandemic in Indonesia: Instrument Evaluation. Psychology Research and Behavior Management, 15, pp.161-170. https://doi.org/10.2147/PRBM.S347386

Background: Regular monitoring of the pandemic’s psychosocial impact could be conducted among the community but is limited through online media. This study aims to evaluate the self-rating questionnaire commonly used for online monitoring of the psychosocial implications of the coronavirus disease 2019 (COVID-19) pandemic.

Methods: The data were taken from the online assessment results of two groups, with a total of 765 participants. The instruments studied were Self-Rating Questionnaire (SRQ-20), posttraumatic stress disorder (PTSD), and Center for Epidemiological Studies Depression Scale-10 (CESD-10), used in the online assessment. Data analysis used Rasch modeling and Winsteps applications. Validity and reliability were tested, and data were fit with the model, rating scale, and item fit analysis.

Results: All the scales for outfit mean square (MnSq) were very close to the ideal value of 1.0, and the Chi-square test was significant. Item reliability was greater than 0.67, item separation was greater than 3, and Cronbach’s alpha was greater than 0.60; all the instruments were considered very good. The raw variance explained by measures for the SRQ-20, PTSD, and CESD-10 was 30.7%, 41.6%, and 47.6%, respectively. The unexplained eigenvalue variances in the first contrast were 2.3, 1.6, and 2.0 for the SRQ-20, PTSD, and CESD-10, respectively. All items had positive point-measure correlations.

Conclusion: The internal consistency of all the instruments was reliable. Data were fit to the model as the items were productive for measurement and had a reasonable prediction. All the scales are functionally one-dimensional.